Dongyeop Lee

I am a second-year Master’s student in the Graduate School of Artificial Intelligence at POSTECH, advised by Professor Namhoon Lee.

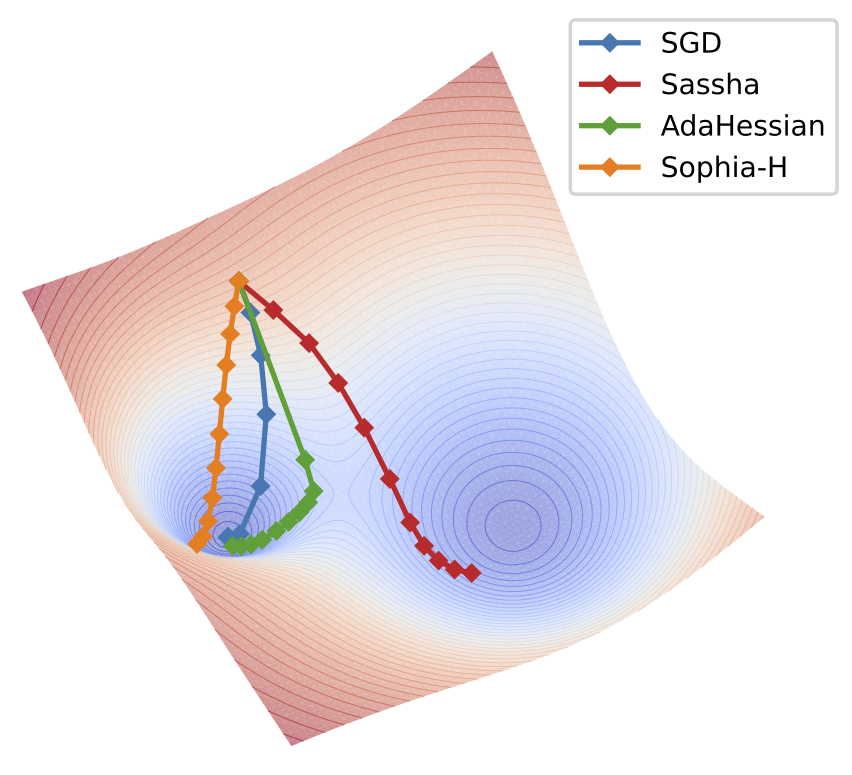

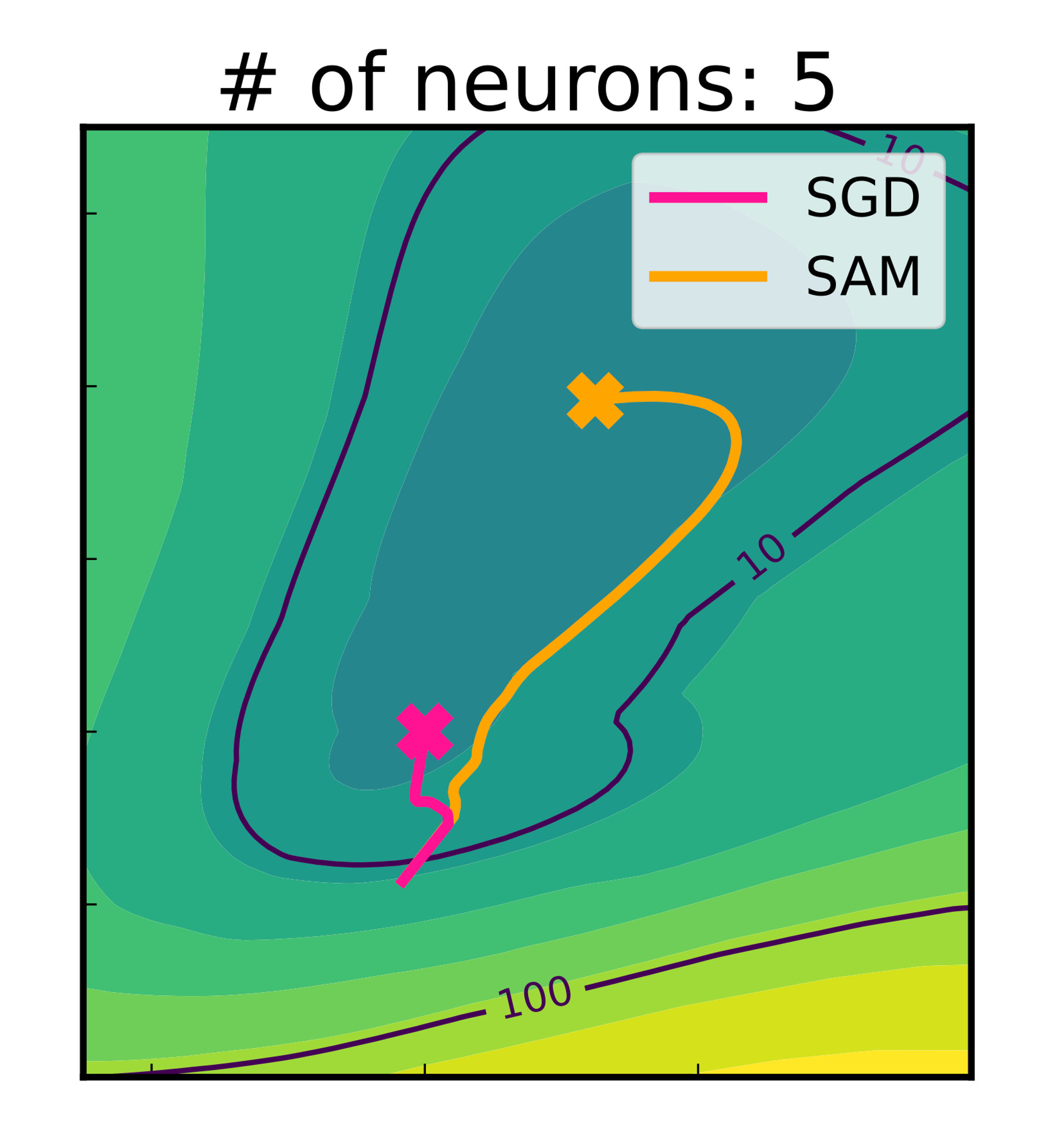

I am broadly interested in challenges arising from large-scale machine learning, particularly in understanding and addressing them through the lens of optimization. My prior work has focused on neural network compression and (implicit) regularization via sharpness minimization. Recently, I have been exploring these topics in the context of Large Language Models (LLMs), aiming to develop efficient compression and optimization techniques.

If you have any questions, please contact me at dongyeop.lee2@postech.ac.kr.

news

| Date | News |
|---|---|
| May 9, 2025 | One paper has been accepted to UAI 2025 🇧🇷: “Critical Influence of Overparameterization on Sharpness-aware Minimization”. |
| May 5, 2025 | Two papers have been accepted to ICML 2025 🇨🇦: SAFE and Sassha. |
| Sep 2, 2024 | Excited to be working as a student researcher at Google for the next 12+ weeks! |
| Nov 24, 2023 | Our new paper on the effects of overparameterization on sharpness-aware minimization won the best paper award at JKAIA 2023! (related news) |
| Oct 25, 2023 | Happy to release 🔨Malet: a Machine Learning Experiment Tool, available via pip! |